Nvidia Smi Output


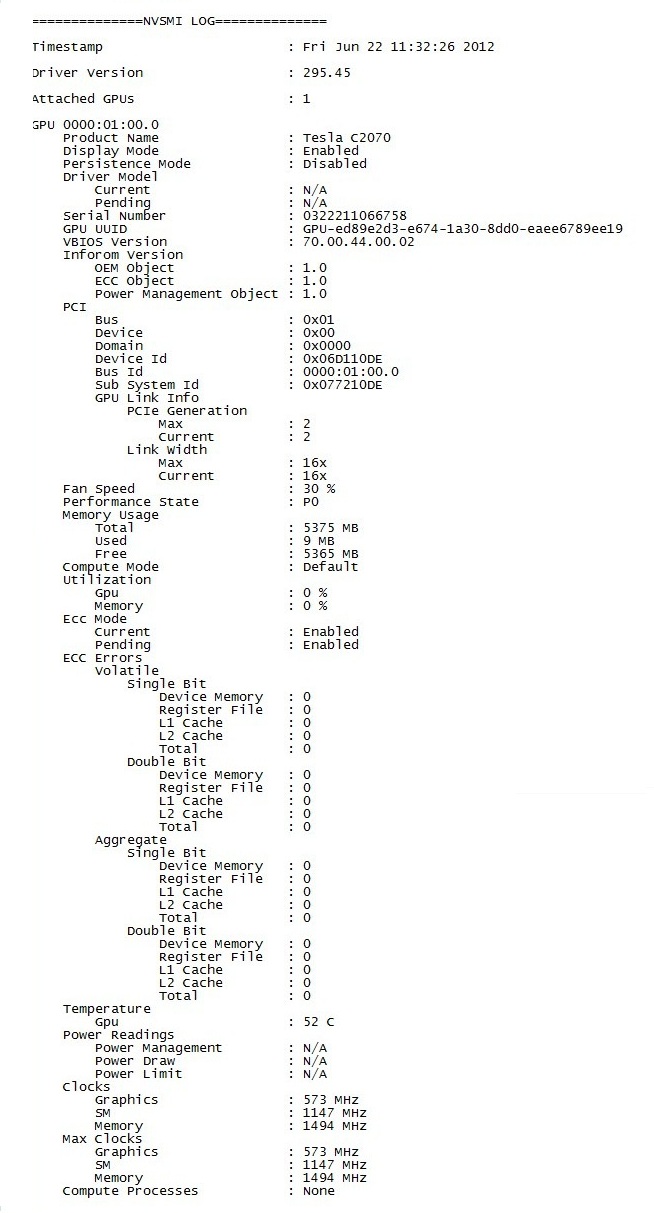

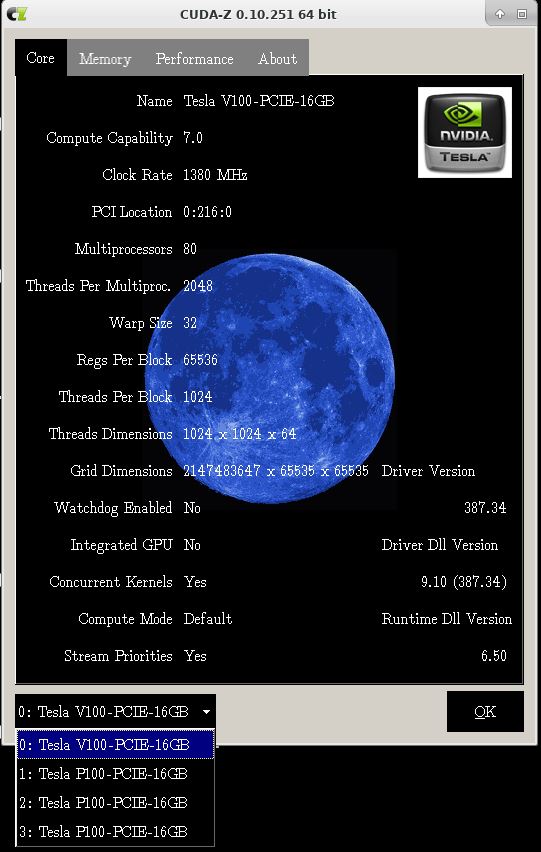

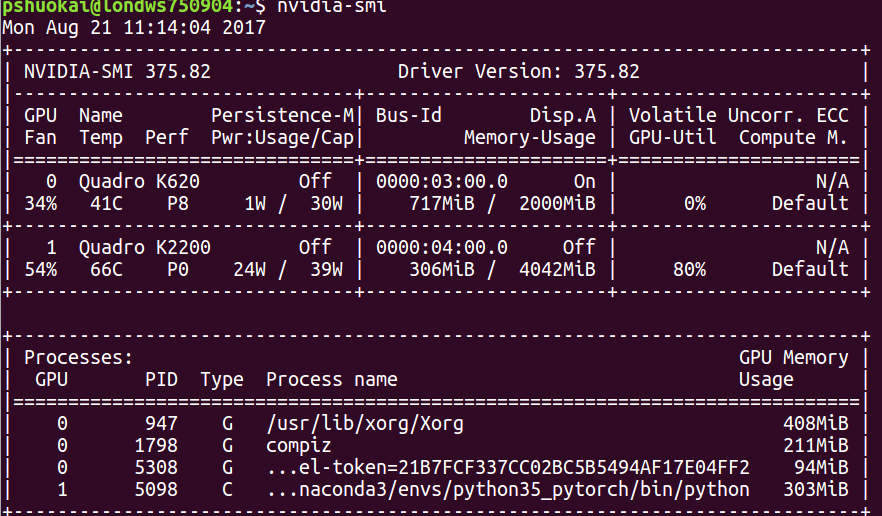

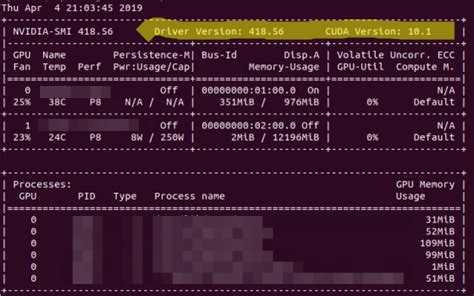

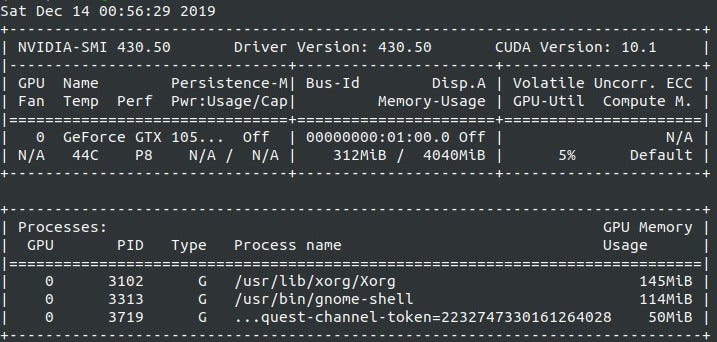

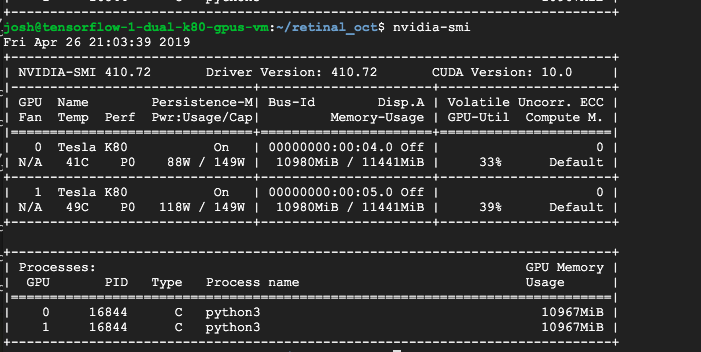

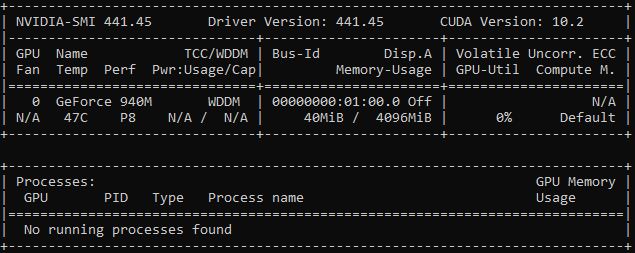

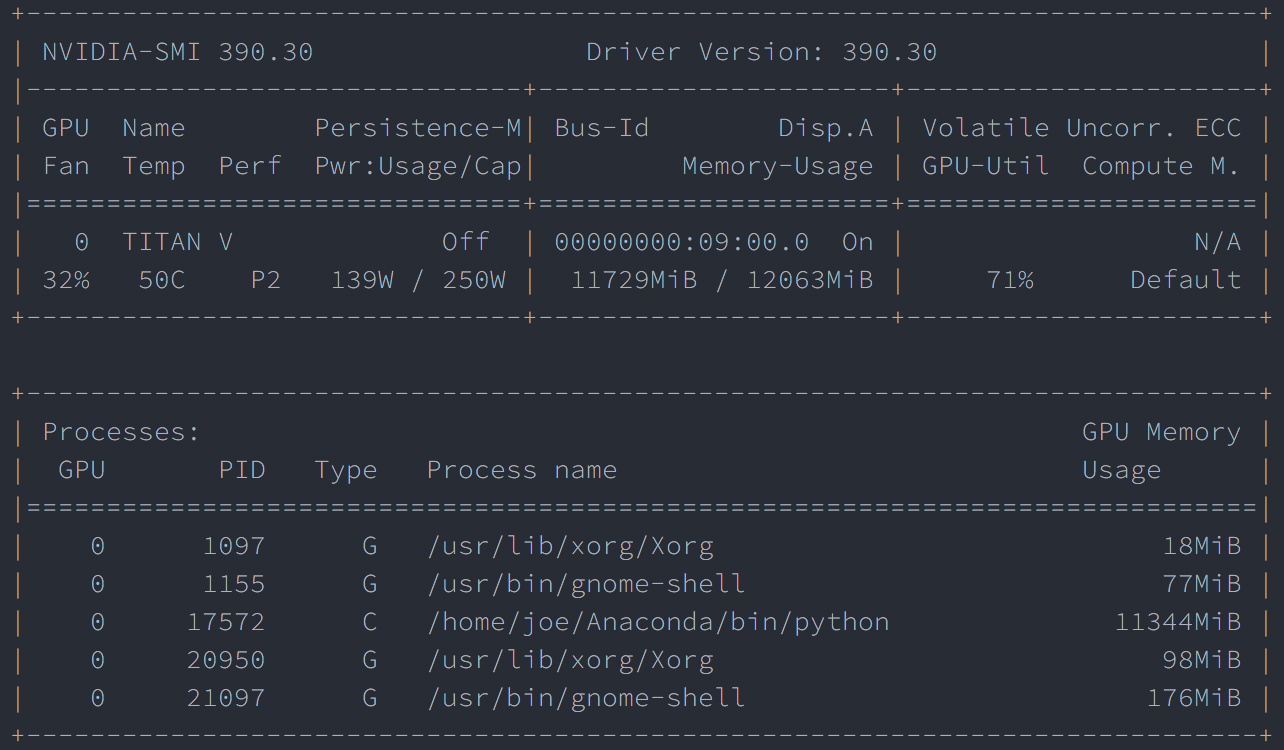

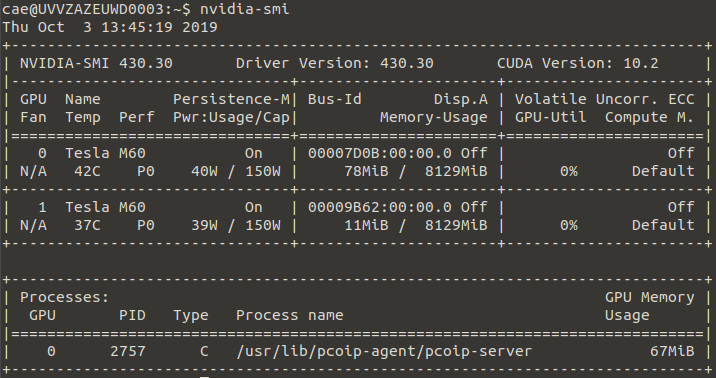

nvidia-smi (also known as NVSMI) is a command-line utility that monitors and manages NVIDIA GPUs such as Tesla, Quadro, GRID and GeForce. Either in an interactive job or after connecting with ssh to a node running your job, the nvidia-smi output should look something like this:

The output of NVSMI is not guaranteed to be backwards compatible. The GPU temperature can be displayed in the shell with nvidia-smi. From the output, we can learn which information is collected.

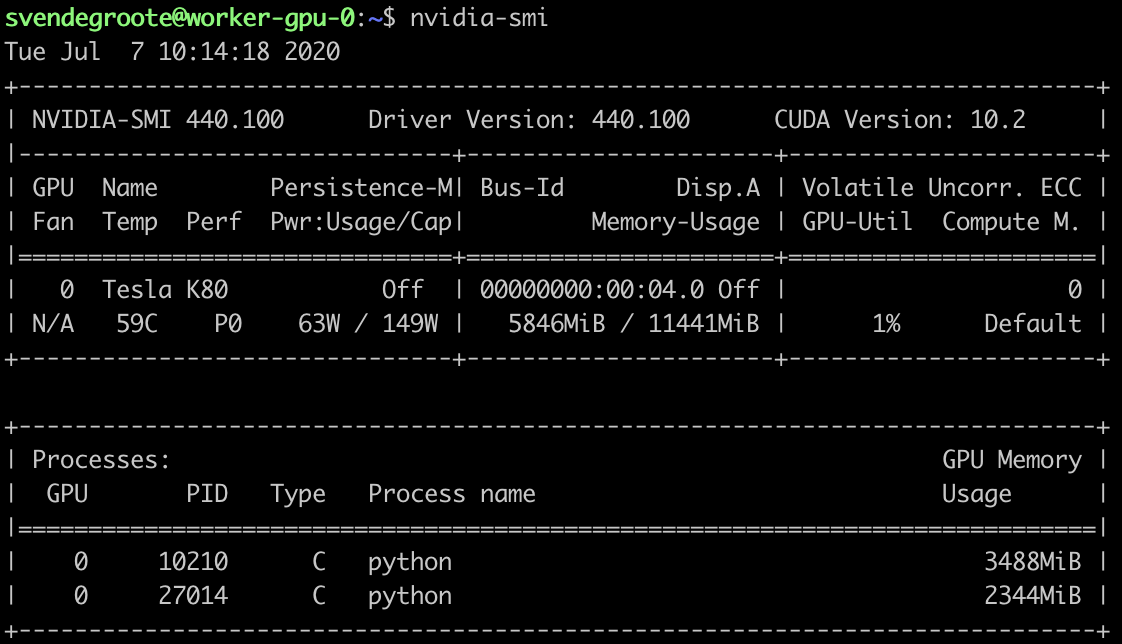

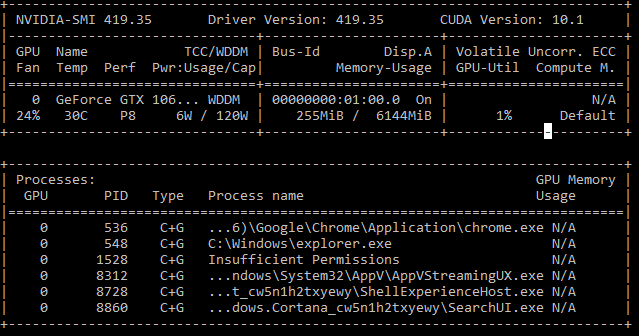

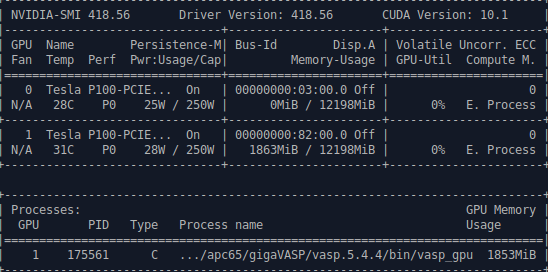

The output of nvidia-smi also looks quite good. One commenter suggested that it would be better to explain what the -d flag does.

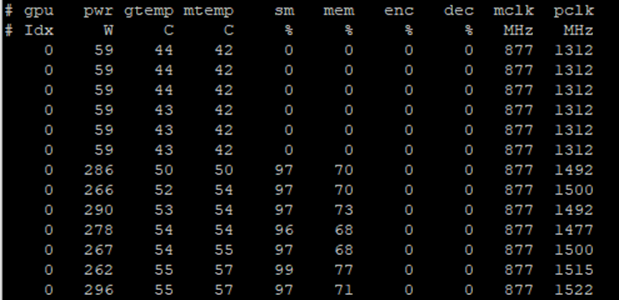

We can obtain GPU information such as utilization, power, memory, and clock speed statistics.

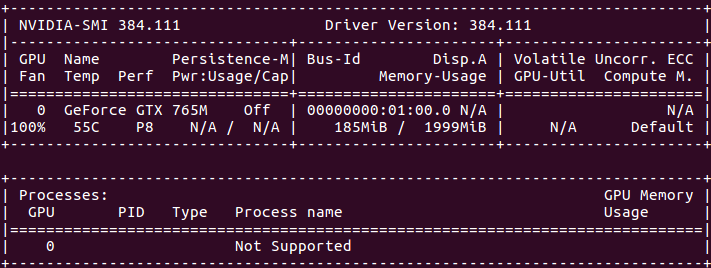

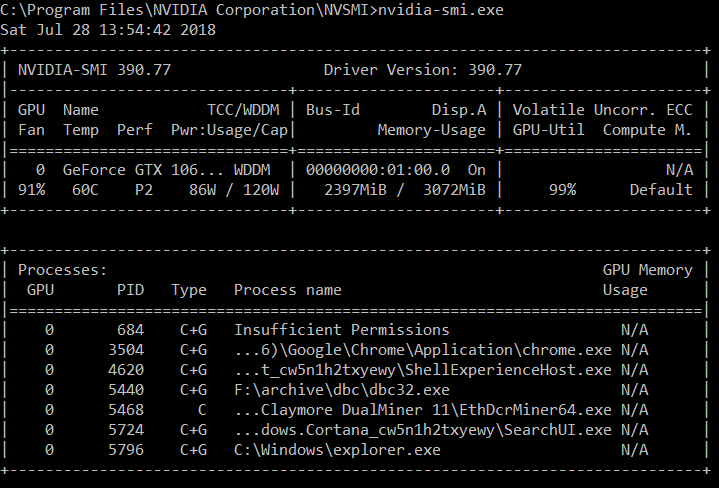

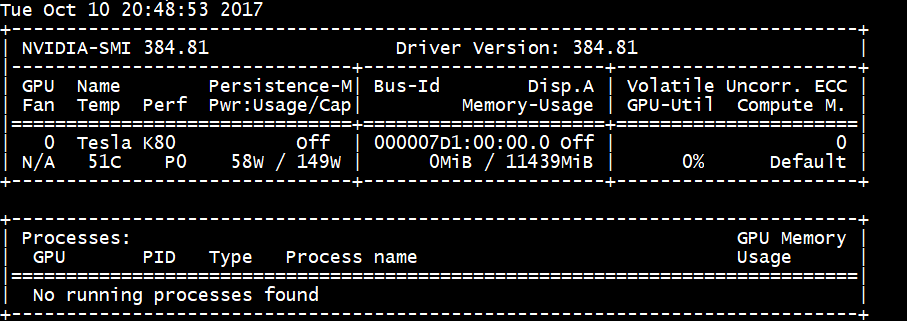

nvidia-smi can report query information as XML or human-readable plain text, to either standard output or a file. I'm having trouble installing CUDA for my setup due to a driver compatibility issue with NVIDIA driver version 384.111. When typing nvidia-smi, I get the information shown in the attached image.
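As a rough illustration of the XML mode, the sketch below parses a hand-written, heavily abbreviated sample shaped like the output of nvidia-smi -q -x. The sample values and the helper name summarize_gpus are made up for illustration. Real output contains many more elements, and element names may differ between driver versions.

```python
import xml.etree.ElementTree as ET

# Hand-written, abbreviated sample in the shape of `nvidia-smi -q -x` output.
# All values are illustrative; real output has many more elements.
SAMPLE_XML = """
<nvidia_smi_log>
  <driver_version>418.87.00</driver_version>
  <attached_gpus>1</attached_gpus>
  <gpu id="00000000:04:00.0">
    <product_name>Tesla V100</product_name>
    <fb_memory_usage>
      <total>16160 MiB</total>
      <used>120 MiB</used>
    </fb_memory_usage>
    <temperature>
      <gpu_temp>41 C</gpu_temp>
    </temperature>
  </gpu>
</nvidia_smi_log>
"""


def summarize_gpus(xml_text):
    """Return the driver version and a list of per-GPU summary dicts."""
    root = ET.fromstring(xml_text.strip())
    gpus = []
    for gpu in root.findall("gpu"):
        gpus.append({
            "id": gpu.get("id"),
            "name": gpu.findtext("product_name"),
            "memory_used": gpu.findtext("fb_memory_usage/used"),
            "memory_total": gpu.findtext("fb_memory_usage/total"),
            "temperature": gpu.findtext("temperature/gpu_temp"),
        })
    return root.findtext("driver_version"), gpus


driver, gpus = summarize_gpus(SAMPLE_XML)
print(driver)
for gpu in gpus:
    print(gpu)
```

On a machine with a working driver, the same function could be fed the text captured from nvidia-smi -q -x instead of the sample string.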

From the nvidia-smi(1) man page: -f FILE, --filename=FILE redirects query output to the specified file in place of the default stdout. nvidia-smi is a command-line utility, built on top of the NVIDIA Management Library (NVML), intended to aid in the management and monitoring of NVIDIA GPU devices. On Windows, run "C:\Program Files\NVIDIA Corporation\NVSMI\nvidia-smi.exe" -q -x and include the output of this command if opening a GitHub issue.

Enabling GPU support for NGC containers: to obtain the best performance when running NGC containers, three methods of providing GPU support for Docker containers have been developed. Troubleshooting the vGPU within the VM can be done using the same nvidia-smi command, which shows which processes are using the vGPU and their usage of memory and compute resources. Note that for brevity, some of the nvidia-smi output in the following examples may be cropped to showcase the relevant sections of interest.

Is it the number of CUDA cores in use, or something else? nvidia-smi ships with the NVIDIA driver, which CUDA Toolkit installers typically bundle. In Figure 3, MIG is shown as Disabled.

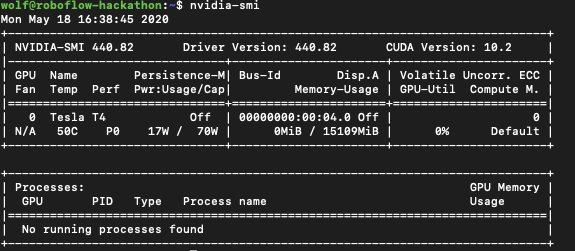

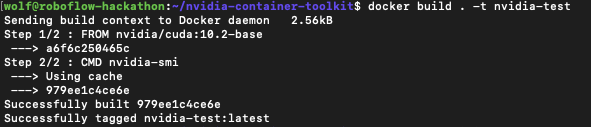

watch -d -n 0.5 nvidia-smi will be even better, since the -d flag of watch highlights the differences between successive updates. Below is the output of the nvidia-smi command. Testing nvidia-smi through the Docker container: this is the moment of truth. If this command runs correctly, you should see the nvidia-smi output that you see on your machine outside of … (from Generative Adversarial Networks Cookbook).

We can select one or more GPUs that are available for computation based on their relative memory usage. On the vSphere host, once it has been put into maintenance mode, the appropriate NVIDIA ESXi Host Driver (also called the vGPU Manager) that supports MIG can be installed. Higher-complexity systems require additional care in examining their configuration and capabilities.
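Selecting GPUs by relative memory usage could be sketched as follows. This is a minimal sketch, not a definitive implementation: the helper names (parse_memory_csv, pick_gpus, query_usage), the 0.5 threshold and the sample figures are all assumptions made for illustration. The query itself uses the --query-gpu and --format=csv options of nvidia-smi.

```python
import subprocess

# CSV query for index, used memory and total memory (values in MiB with nounits).
QUERY_CMD = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_csv(text):
    """Parse 'index, used, total' lines into (index, used/total) pairs."""
    usage = []
    for line in text.strip().splitlines():
        index, used, total = (field.strip() for field in line.split(","))
        usage.append((int(index), float(used) / float(total)))
    return usage


def pick_gpus(usage, count=1, max_fraction=0.5):
    """Return up to `count` GPU indices at or below max_fraction, least used first."""
    candidates = sorted((frac, idx) for idx, frac in usage if frac <= max_fraction)
    return [idx for _, idx in candidates[:count]]


def query_usage():
    """Run nvidia-smi (requires an NVIDIA driver) and parse its CSV output."""
    result = subprocess.run(QUERY_CMD, capture_output=True, text=True, check=True)
    return parse_memory_csv(result.stdout)


# Illustrative sample instead of a live query, so the sketch runs anywhere.
SAMPLE = "0, 15000, 16160\n1, 120, 16160\n2, 4000, 16160\n"
selected = pick_gpus(parse_memory_csv(SAMPLE), count=2)
print(selected)  # [1, 2]
```

On a GPU node, pick_gpus(query_usage()) would use live data. The resulting indices could then be joined with commas and exported as CUDA_VISIBLE_DEVICES before launching a job.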

On the other hand, this format is not helpful if we wish to monitor the GPU's status continuously. nvidia-smi (NVSMI) is the NVIDIA System Management Interface program.

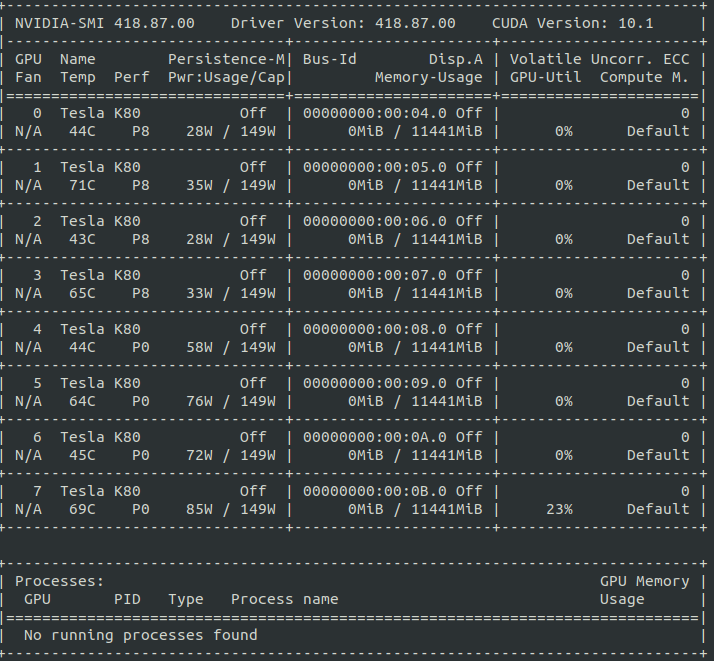

Success nvidia-smi 8x GPU in Supermicro SuperBlade GPU node. Fix incorrect uptime when clock is adjusted. User@gpu01 ~ $ nvidia-smi +-----+ | NVIDIA-SMI 418.87.00 Driver Version:.

Both GPU and Unit query outputs conform to corresponding DTDs. nvidia-smi provides monitoring and management features for Tesla, Quadro, GRID and GeForce NVIDIA GPUs from the Fermi and higher architecture families.

nvidia-smi -i 0,1,2
* Added support for displaying the GPU encoder and decoder utilizations
* Added nvidia-smi topo interface to display the GPUDirect communication matrix (EXPERIMENTAL)
* Added support for displaying the GPU board ID and whether or not it is a multi-GPU board
* Removed user-defined throttle reason from XML output

I'm not sure what exactly "Volatile GPU-Util" means. nvidia-smi is the command to be run on the container. You should get output that looks like the below:

Overall Syntax From nvidia-smi nvlink -h root@localhost ~# nvidia-smi nvlink -h nvlink -- Display NvLink information. Monitor and Optimize Your Nvidia GPU in Linux. Nvidia-smi output meaning Showing 1-10 of 10 messages.

Example output of the nvidia-smi command showing one GPU and a process using it. nvidia-smi is an application that queries the NVML (NVIDIA Management Library).

You can also run nvidia-smi -h to see a full list of customization flags. Sometimes these commands can be a bit tricky to execute. The nvidia-smi output below and specific examples should help anyone having trouble with the -i switch for targeting specific GPU IDs.

Connect to the Windows Server instance and use the nvidia-smi.exe tool to verify that the driver is running properly. Add video codec stats. Unknown GPU memory user in nvidia-smi output.

watch -n 0.5 nvidia-smi will keep the output updated without filling your terminal with output. Check the full output by running the nvidia-smi binary manually, for example: sudo -u telegraf -- /usr/bin/nvidia-smi -q -x
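For continuous monitoring from a script rather than from watch, a polling loop along the following lines is one option. It is only a sketch: the function names, the default interval and the stand-in readings are assumptions for illustration, and the fields requested through --query-gpu are just one possible selection.

```python
import subprocess
import time

# One CSV line per GPU: timestamp, GPU utilization, used memory, temperature.
CMD = [
    "nvidia-smi",
    "--query-gpu=timestamp,utilization.gpu,memory.used,temperature.gpu",
    "--format=csv,noheader",
]


def read_once():
    """Run nvidia-smi once (requires an NVIDIA driver) and return its output."""
    result = subprocess.run(CMD, capture_output=True, text=True, check=True)
    return result.stdout.strip()


def monitor(read=read_once, interval=0.5, samples=3, sleep=time.sleep):
    """Collect `samples` readings, waiting `interval` seconds between them."""
    readings = []
    for i in range(samples):
        readings.append(read())
        if i < samples - 1:
            sleep(interval)
    return readings


# Stand-in reader so the sketch runs without a GPU; the strings are made up.
fake = iter(["t0, 10 %, 100 MiB, 40", "t1, 55 %, 900 MiB, 47"])
demo = monitor(read=lambda: next(fake), interval=0, samples=2)
print(demo)
```

On a real node, monitor() with its defaults would call nvidia-smi itself. nvidia-smi also has its own -l/--loop= option, which may be simpler when no post-processing is needed.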

Added new "GPU Max Operating Temp" to nvidia-smi and SMBPBI to report the maximum GPU operating temperature for Tesla V100. Added CUDA support to allow JIT linking of binary compatible cubins. Fixed an issue in the driver that may cause certain applications using unified memory APIs to hang. In some situations there may be HW components on the board that fail to revert back to an initial. Mostly because the commands "nvidia-smi -lso" and "nvidia-smi -lsa" don't exist anymore.

Here are the commands I found and tested, and their output. The Amazon EKS optimized Amazon Linux AMI is built on top of Amazon Linux 2, and is configured to serve as the base image for Amazon EKS nodes. nvidia-smi ships with the NVIDIA driver, which CUDA Toolkit installers typically bundle, and it provides meaningful insights.

It is OK for Xorg to take a few MB. So that I can use "grep" to select a GPU and set the variable "newstring" (the temp.

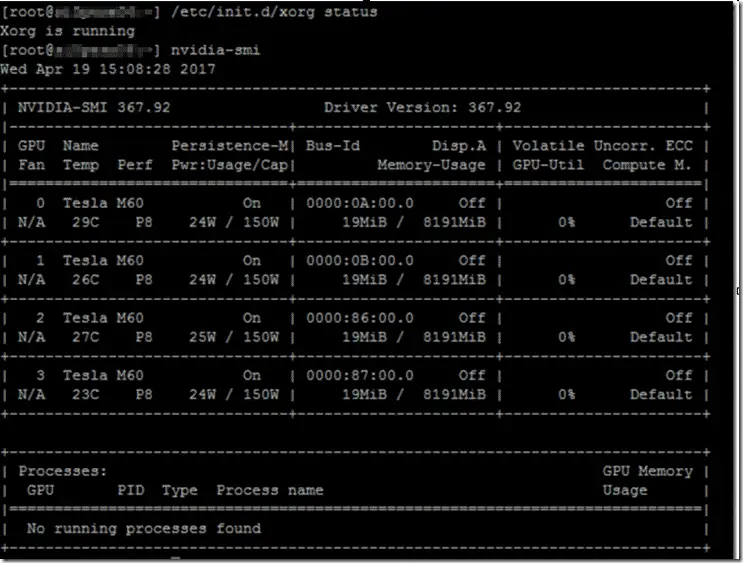

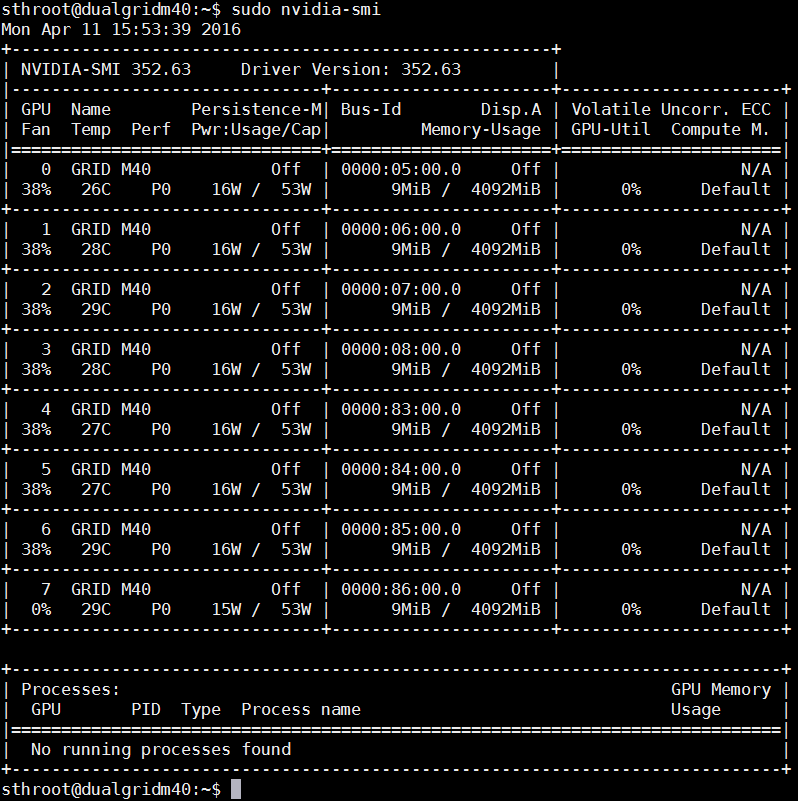

Nvidia-smi showing that the X server is running on the NVIDIA eGPU with other usage metrics. Here is the nvidia-smi output with our 8x NVIDIA GPUs in the Supermicro SuperBlade GPU node:. | NVIDIA-SMI 384.73 Driver Version:.

log = NVLog(); print(log['Attached GPUs']['GPU :04:00.0']['Processes'][0]['Used GPU Memory']). I have an Ubuntu machine (16.04) with a GPU.

This is a simple script which runs the nvidia-smi command on multiple hosts and saves the output to a common file. When running nvidia-smi I get this result (all applications are closed). The results should display the utilization of at least one GPU, the one in your eGPU enclosure.
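A script of that kind might look roughly like the sketch below. It is not the script referred to above, just an illustration under stated assumptions: the hosts are reachable with key-based ssh, and the helper names, the host names gpu01 and gpu02, and the output file name are hypothetical.

```python
import os
import subprocess
import tempfile


def run_remote(host, command="nvidia-smi"):
    """Run a command on `host` over ssh and return its stdout.

    Assumes key-based login; no password prompt is handled here.
    """
    result = subprocess.run(
        ["ssh", host, command], capture_output=True, text=True, timeout=30
    )
    return result.stdout


def collect(hosts, path, runner=run_remote):
    """Write each host's output to one file, under a header naming the host."""
    with open(path, "w") as out:
        for host in hosts:
            out.write(f"===== {host} =====\n")
            out.write(runner(host).rstrip("\n") + "\n")


# Demo with a fake runner so the sketch runs without ssh or GPUs.
demo_path = os.path.join(tempfile.mkdtemp(), "nvidia-smi-all.txt")
collect(["gpu01", "gpu02"], demo_path, runner=lambda host: f"fake output from {host}\n")
with open(demo_path) as handle:
    report = handle.read()
print(report)
```

Calling collect(hosts, path) without the runner argument would use ssh for real. Passing the runner in as a parameter keeps the file-writing logic testable on a machine with no GPUs.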

The header contains information about the versions of the drivers and APIs used. In addition, we have a fancy table of GPUs with more information obtained through the Python bindings to NVML. It's a quick and dirty solution that calls nvidia-smi and parses its output.

For more information on the MIG commands, see the nvidia-smi man page or nvidia-smi mig --help. MIG in Disabled Mode – seen on the fourth and seventh rows at the far right side of the nvidia-smi command output. It parses and maps the output of nvidia-smi -q into a Python object.
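To give an idea of how plain-text nvidia-smi -q output can be mapped into a Python object, here is a small sketch that turns indented "Key : Value" lines into nested dictionaries. It is not the implementation of any particular library. The function name and the abbreviated sample text are assumptions for illustration, and real output has many more fields.

```python
# Hand-written, abbreviated sample in the shape of `nvidia-smi -q` output.
SAMPLE_TEXT = """\
==============NVSMI LOG==============

Driver Version                      : 418.87.00
Attached GPUs                       : 1
GPU 00000000:04:00.0
    Product Name                    : Tesla V100
    FB Memory Usage
        Total                       : 16160 MiB
        Used                        : 120 MiB
    Temperature
        GPU Current Temp            : 41 C
"""


def parse_query_text(text):
    """Map indented 'Key : Value' lines into nested dicts."""
    root = {}
    # Each stack entry is (indent of the section header, dict for that section).
    stack = [(-1, root)]
    for raw in text.splitlines():
        if not raw.strip() or raw.startswith("="):
            continue  # skip blank lines and the banner
        indent = len(raw) - len(raw.lstrip(" "))
        # Leave any section whose header is at the same or a deeper indent.
        while stack[-1][0] >= indent:
            stack.pop()
        parent = stack[-1][1]
        key, sep, value = raw.strip().partition(" : ")
        if sep:
            parent[key.strip()] = value.strip()
        else:
            # A line without ' : ' opens a new nested section.
            section = {}
            parent[key] = section
            stack.append((indent, section))
    return root


parsed = parse_query_text(SAMPLE_TEXT)
print(parsed["GPU 00000000:04:00.0"]["FB Memory Usage"]["Used"])  # 120 MiB
```

Because the text format is not guaranteed to be stable between driver versions, the XML output is probably the safer input for anything long-lived.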

This is the output of nvidia-smi: Fri Apr 5 00:45:57 1 …. 384.73 | | GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. The specified file will be overwritten.

When running Wayland or on a headless server. I've been trying for a couple of days, but have had no luck. Nvidiagpubeat is an Elastic Beat that uses the NVIDIA System Management Interface (nvidia-smi) to monitor NVIDIA GPU devices and can ingest metrics into an Elasticsearch cluster, with support for both the 6.x and 7.x versions of Beats.

This is the result on an empty Windows Server 16 VM with 1 session and no special graphical load. Note that the Pull complete portions (the parts above the nvidia-smi output) are a one-time occurrence: the image is not on your system locally and is being fetched in order to launch it as a container instance.

Test nvidia-smi with the latest official CUDA image: docker run --runtime=nvidia --rm nvidia/cuda nvidia-smi. When using Debian GNU/Linux 9.4 (Stretch) as your host machine, … The same test against the nvcr.io image: docker run --runtime=nvidia --rm nvcr.io/nvidia/cuda nvidia-smi

Then I tried to run some tensorflow-gpu tests, and they were running on the GPU (as shown by nvidia-smi)! For most functions, GeForce Titan Series products are supported with only a. For more details, please refer to the nvidia-smi documentation.

Use nvidia-smi, which can read temperatures directly from the GPU without the need to use X at all. It is also known as NVSMI.

A header with a GPU list and a process list. However, both NVML and the Python bindings are backwards compatible. I'm running an MLP with Pylearn2/Theano on an HPC cluster.

Running $ nvidia-smi should output something similar to the following. On Windows, run "C:\Program Files\NVIDIA Corporation\NVSMI\nvidia-smi.exe"; the output resembles the following. The goal here is to make it run asynchronously.

Hi all, I have followed the instructions at and installed the NVIDIA drivers and CUDA Toolkit. As a hint here, in most settings we have found sudo to be important. Upon reboot, test nvidia-smi with the latest official CUDA image.

Figure 2: Example output of nvidia-smi. The output is split into 2 parts. If you're attempting to report an issue with your GPU, the information from nvidia-smi can easily be saved straight to a file using a command like nvidia-smi > nvidia-output.txt.

* Running nvidia-smi without -q command will output non verbose version of -q instead of help
* Fixed parsing of -l/--loop= argument (default value, 0, to big value)
* Changed format of pciBusId (to XXXX:XX:XX.X - this change was visible in 280)

Figure 1: nvidia-smi output. nvidia-smi is the de facto standard tool when it comes to monitoring the utilisation of NVIDIA GPUs.

The AMI is configured to work with Amazon EKS and it includes Docker, kubelet, and the AWS IAM Authenticator. nvidia-smi reports structured output when we use the --query (-q) option.

After the reboot, nvidia-smi produced output (as I said in the question's update).

* Getting pending driver model works on non-admin
* Added support for running nvidia-smi on Windows Guest accounts

-x, --xml-format: Produce XML output in place of the default human-readable format.

Linux Top Command In The Command And Nvidia Smi Programmer Sought

Nvidia System Management Interface Nvidia Developer

Testing Nvidia Smi Through The Docker Container Generative Adversarial Networks Cookbook Book

Nvidia Smi Output Gallery

A Guide To Gpu Sharing On Top Of Kubernetes By Sven Degroote Ml6team

Nvidia Disappeared After Ubuntu Restart Nvidia Smi Has Failed Because It Couldn T Communicate With The Nvidia Driver Programmer Sought

Nvidia Rendering Issues Look At The Stats Yellow Bricks

How To Use The Gpu Within A Docker Container

3 Output Of Nvidia Smi For Sample Experiment Download Scientific Diagram

Explained Output Of Nvidia Smi Utility By Shachi Kaul Analytics Vidhya Medium

Gpu Transcoding Slow Linux Emby Community

How To Setup An Egpu On Ubuntu For Tensorflow

Virtual Gpu Software User Guide Nvidia Virtual Gpu Software Documentation

Benchmark 10x Tesla P100 Gpu Nvtp100 16 General Discussion Linus Tech Tips

Issues In Installing Nvidia 390 396 Driver On Ubuntu 18 04 Ziheng Jensen Sun

Gpu Nvidia Passthrough On Proxmox Lxc Container Passbe Com

Reset Memory Usage Of A Single Gpu Stack Overflow

Running Gpu Accelerated Kubernetes Workloads On P3 And P2 Ec2 Instances With Amazon Eks Aws Compute Blog

Pushing The Nvidia Grid Vib To Vsphere Using Update Manager For Vgpus Vcloudinfo

Choose Gpu To Be Used In Ffmpeg Computer How To

A Top Like Utility For Monitoring Cuda Activity On A Gpu Stack Overflow

Nvidia Smi Doesn T Display Gpu Device In Order Nan Xiao S Blog

Perform Gpu Cpu And I O Stress Testing On Linux

How To Explain This Figure About Nvidia Smi With Nvidia Gpus Stack Overflow

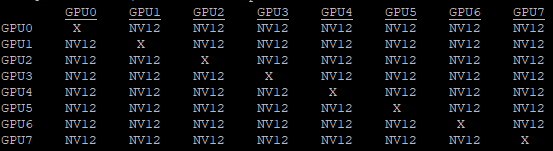

Understanding Nvidia Smi Topo M Output Stack Overflow

Monitoring Of Gpu Usage With Tensorflow Models Using Prometheus

View Nvidia Graphics Card Memory Usage In Real Time Under Ubuntu Programmer Sought

Very Low Gpu Usage During Textclassifier Training Issue 423 Flairnlp Flair Github

How To Install Nvidia Driven Rtx 80 Ti Gpu On Ubuntu 16 04 18 04 Programmer Sought

Up And Running With Ubuntu Nvidia Cuda Cudnn Tensorflow And Pytorch By Kyle M Shannon Hackernoon Com Medium

Attach A Gpu To A Linux Vm In Azure Stack Hci Azure Stack Hci Microsoft Docs

Multi Gpu Training Hangs Issue 448 Nvidia Openseq2seq Github

18 04 Is My Nvidia Driver Working Or Not Ask Ubuntu

Tapping Into My New Gpu From Kubeflow

Rdwrt Does Anyone Know How To Get The Cuda Version Output As Csv Output From An Nvidia Smi Query Nvidia Smi Help Query Gpu Isn T Giving Me Helpful Results Currently Workaround With Nvidia Smi Q

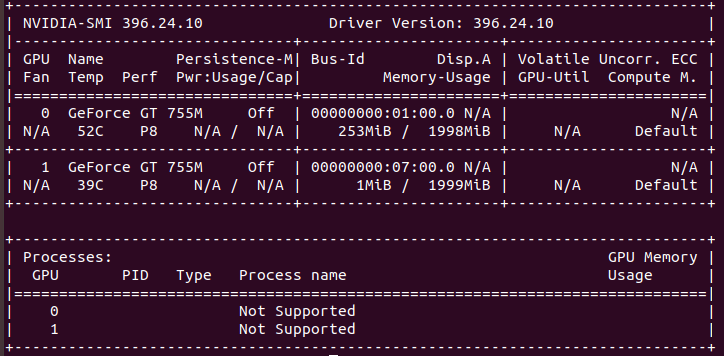

Geforce Card Not Showing Ultilization Percentage Or Process Names In Nvidia Smi Unraid

Cuda Out Of Memory Transfer Learning Vision Pytorch Forums

Installing Cuda And Cudnn On Ubuntu 16 04 Lts Mc Ai

Using Gpus With Virtual Machines On Vsphere Part 4 Working With Bitfusion Virtualize Applications

Why The Two Gpus On My Machine Have The Same Id So That Pytorch Can Only Choose One Pytorch Forums

Monitoring The Framebuffer For Nvidia Grid Vgpu And Gpu Passthrough

Pytorch Gpu Inference With Docker And Flask Papper S Coding Blog Have Fun Coding

Gpu Utilization Is N A When Using Nvidia Smi For Geforce Gtx 1650 Graphic Card Stack Overflow

Kernel With Keras Tensorflow Not Using Gpu Anymore Data Science And Machine Learning Kaggle

Cuda S Nvidia Smi Command Detailed Programmer Sought

How To Install A Linux Gpu Driver On The G2500 Server Huawei Server Os Installation Guide 29 Huawei

Gpu Server Troubleshooting Laptrinhx

Tensorflow Docker Nayan Blog

What Does Off Mean In The Output Of Nvidia Smi Stack Overflow

Nvlink On Nvidia Geforce Rtx 80 80 Ti In Windows 10

Short And Always Working Install Cuda On Gcp Vm Centos7 Centos8 Fun In It

Patterson Consulting A Practical Guide For Data Scientists Using Gpus With Tensorflow

How To Dedicate Your Laptop Gpu To Tensorflow Only On Ubuntu 18 04 By Manu Nalepa Towards Data Science

How To Monitoring Nvidia Gpu Utilization In Linux Computer How To

How To Monitor Nvidia Gpu Performance On Linux

Zhm9484 Manye

Openpower Ibm S2lc Adding Nvidia Gpu Stats To Nmon Using Two Methods

Some Gtx 1060 3gb Cards Have Low Hashrate With Ethash Coins Developernote Com

Azure N Series Gpu Driver Setup For Linux Azure Virtual Machines Microsoft Docs

Installing A Tesla Driver And Cuda Toolkit On A Gpu Accelerated Ecs Elastic Cloud Server User Guide Instances Optional Installing A Driver And Toolkit Huawei Cloud

Getting Your Hands Dirty On Ubuntu 18 04 Tensorflow 1 13 1 Cuda10 0 By Neeraj Vashistha Medium

Tensorflow Specifies The Gpu Training Model Programmer Sought

Tips And Tricks To Optimize Workflow With Tf And Horovod On Gpus Dell United Arab Emirates

Troubleshooting Gcp Cuda Nvidia Docker And Keeping It Running By Thushan Ganegedara Towards Data Science

18 04 Can Somebody Explain The Results For The Nvidia Smi Command In A Terminal Ask Ubuntu

Gpu Running Out Of Memory Vision Pytorch Forums

Checking If Remote Server Is Using Gpu Issue 2181 Pjreddie Darknet Github

Getting Ready For Deep Learning Using Gpus A Setup Guide By Shishir Suman Analytics Vidhya Medium

Can T Get Hw Transcoding Working In Docker With Nvidia Linux Emby Community

Expected Behavior Nvidia Smi Reports Incorrect Memory Usage In Windows 7 8 X64 Cuda Setup And Installation Nvidia Developer Forums

Improving Cnn Training Times In Keras By Dr Joe Logan Medium

Nvidia Usable Memory 4gb Instead Of 6gb Unix Linux Stack Exchange

Profiling And Optimizing Deep Neural Networks With Dlprof And Pyprof Nvidia Developer Blog

Docker Os Gpu For Apt Installation Recipe

Check Gpu Utilization Paraview Support Paraview

Apt Cuda Nvidia Smi Has Failed Because It Couldn T Communicate With The Nvidia Driver Ask Ubuntu

Andrey S Rants And Notes Like Top But For Gpus

What S The Difference Between Nvidia Smi Memory Usage And Gpu Memory Usage Stack Overflow

Bitnami Engineering Using Gpus With Kubernetes

Troubleshooting Nvidia Gpu Driver Issues By Andrew Laidlaw Medium

How To Check Cuda Version Easily Varhowto

How To Run Python On Gpu With Cupy Stack Overflow

Drivers Nvidia Smi Problem Ask Ubuntu

Building The Required Singularity Container By Abhinav Patel Medium

Install Conda Tensorflow Gpu And Keras On Ubuntu 18 04 Mc Ai

Useful Nvidia Smi Commands Learn Cuda Programming

Training Language Model With Nn Dataparallel Has Unbalanced Gpu Memory Usage Fastai Users Deep Learning Course Forums

Dell Poweredge 14g Esxi Returns Failed To Initialize Nvml Unknown Error With Nvidia Gpu Dell Belize

Success Nvidia Smi 8x Gpu In Supermicro Superblade Gpu Node Servethehome